SOFTWARE ENGINEERING

The Case for CASE

BY KARL E. WIEGERS

Our exploration of software engineering has revealed that this young discipline includes three main components: methods, procedures and tools. The methods we've discussed include structured techniques for systems analysis and specification, systems design, programming and testing. We really have not talked much yet about the procedures that can help to produce top-quality software, but they include software quality assurance, measurement techniques (metrics) and project management procedures.

In the past few months, we've talked about some "tools" for building models of our systems, defining process specifications and so on. These useful concepts might be classed more appropriately in the methods category, since they define a methodology for modern software development. The tools component of software engineering really refers more to computer software intended to facilitate our task of writing computer software. There are software tools to assist with coding (such as syntax-checking editors), documentation (desktop publishing programs, for example), project management, analysis and design and even the automated writing of code.

"Computer-aided software engineering," or CASE, is the term often applied to the idea of using the computer to help us with the steps of program specification, analysis and design, data design and code generation. CASE is the software engineering analog to the computer-aided design, engineering and manufacturing (CAD, CAE, CAM) methods that have been used for years by various sorts of hardware engineers. By now around 100 CASE products are available commercially. It may seem surprising that it has taken so long for software developers to devise programs to help them do their work. But these are very complex software packages, which probably explains why it has taken a while for them to come on the market.

Today I'd like to describe some of the different kinds of CASE tools available and give you an idea of why this is such an exciting subfield of software engineering. CASE has the potential to greatly increase the productivity of individual software developers while also improving the quality of their work. CASE is an extremely important step on the path from computer programming as an art form to genuine software engineering.

CASE flavors

These are the major categories into which we can classify CASE tools.

Toolkit—a group of integrated tools that help you perform one type of software development activity, such as analysis/design, program optimization, code generation and so on.

Workbench—a general purpose development environment, probably based on a powerful workstation that contains multiple, integrated tools to assist with the entire range of activities involved in software development. Sort of "cradle to grave" software engineering assistance.

Methodology companion—software intended to support a particular software development methodology. "Methodology" here refers to a sequence of steps followed while developing software; there are a variety of different approaches, each with its cadre of enthusiasts and detractors. Methodology companions can be either at the toolkit level or at the more comprehensive workbench level.

As I said, these are not simple programs. Many of them are designed to run on mainframes, minicomputers or powerful graphics workstations. A workstation is a super-microcomputer, usually dedicated to a single user and equipped with a high-resolution graphics display, a fast microprocessor, several megabytes of RAM and a very large hard disk. The Atari Mega ST could be used as the heart of such a workstation, if properly configured. A few CASE packages are available for microcomputers of the IBM PC-AT class, but even these are not cheap, with prices ranging from $1,000 to $10,000. There are even some CASE tools for the Apple Macintosh, but don't hold your breath waiting to see something for the Atari ST line. (Anyone for the Magic Sac?)

Now let's take a closer look at some of the amazing things these products can do for you.

Analysis and design toolkits

Remember all those nasty data flow diagrams I showed you in previous articles? If you've tried drawing any of them (and I hope you have), I'm sure you quickly discovered how annoying it can be when you have to make a change. You don't want to redraw the whole diagram, so you get out the old eraser. But after several erasures and corrections, you can hardly read the original page, so you need to redraw it anyway. Things are even worse if the change you make affects several diagrams, such as a parent and child pair, not to mention any changes that need to be made in the data dictionary, structure charts or process narratives.

The temptation is to fall into one of two traps whenever you're faced with changing a data flow diagram. The first trap is the tendency to not make the design change at all, thereby compromising the quality of the ultimate program you're writing. The whole idea behind system modeling is to change the system on paper as many times as necessary to wind up with the right final product.

The second trap is the decision to go ahead and change the program, but not modify the corresponding design diagrams. This means you have an inconsistency between your product (the program) and its documentation (the diagrams), thereby reducing the utility of the documentation. Your documentation should always match the system to which it refers. Erroneous documentation is worse than useless: It can waste your time by letting you chase bugs down blind alleys.

How can you avoid these traps and still not spend your entire life redrawing circles and arrows and boxes? With a CASE analysis/design toolkit, that's how.

A primary feature of all analysis/design CASE tools is the ability to draw and edit the diagrams used for structured analysis and design. These might include data flow diagrams, structure charts, flow charts, control flow diagrams, state-transition diagrams, entity-relationship diagrams and others (yes, I know we haven't discussed all of these yet). The programs are almost always mouse-driven, using a graphics monitor and on-screen menus to let you select the symbols you want to draw.

These CASE tools are not unlike paint programs available for the Atari ST, except that they usually have a fixed set of symbols, rules for how you can combine the symbols (no overlapping, for example) and none of the painting kinds of effects, such as free-form drawing, area fill, color and so on. They also have specialized abilities to check diagrams for errors, log entries in a data dictionary and so on. I tried using Degas Elite as a poor man's CASE tool for the Atari ST, and it just didn't work out very well. An actual CAD program might work better for the drawing, but it still won't know anything about data dictionaries or rules for DFDs.

Most CASE programs support a variety of diagram-drawing conventions. Some even allow you to define your own methodology and design your own symbols. The DFDs I've shown in previous articles are drawn using conventions usually referred to as Yourdon or DeMarco, named after the software engineering pioneers who conceived these modeling techniques. Other DFD methodologies exist; the shapes and uses of some symbols may vary a little, but the diagrams follow the same general ideas. Many of the software engineering methodologies are named after their founders, so if you want to get your name in the SE history books, here's a possible way to do it.

The real beauty of CASE appears when you need to change a diagram. You can easily add new symbols and connect them to the old, remove symbols and their connecting flows, change symbol sizes and labels and move symbols around on the screen. So, at one level, these CASE tools are graphical editing programs. But there's more; read on.

After drawing a diagram, you may want to validate it to make sure you haven't made any syntactical errors. These CASE programs are smart enough to know the rules for drawing the various sorts of diagrams. They'll keep you honest by warning you of any methodology violations. Examples of such errors on a DFD include failing to name an object, not connecting all objects properly with data flows, having data flows ending up in midair rather than terminating at an object and so on. Unfortunately, the computer can't read your mind, so it can't catch logical boo-boos, only syntax errors.
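To make those rules concrete, here is a short Python sketch of the sort of check such a program might run. The diagram representation (a dictionary of labeled objects plus a list of flows whose missing ends are recorded as `None`) and the name `check_dfd` are my own assumptions for illustration, not the internals of any actual CASE product. It catches only the three kinds of syntax errors just mentioned; like any real tool, it knows nothing about your logic.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Flow:
    name: str
    source: Optional[str]  # None: the flow starts in midair
    dest: Optional[str]    # None: the flow ends in midair


def check_dfd(nodes: dict[str, str], flows: list[Flow]) -> list[str]:
    """Return the syntax errors found in a data flow diagram.

    nodes maps each object's label to its kind ("process", "store" or
    "external"); an empty label stands for an object nobody named.
    """
    errors = []
    if any(not label.strip() for label in nodes):
        errors.append("object without a name")
    for f in flows:
        if not f.name.strip():
            errors.append(f"unnamed flow from {f.source} to {f.dest}")
        for end in (f.source, f.dest):
            if end is None:
                errors.append(f"flow '{f.name}' dangles in midair")
            elif end not in nodes:
                errors.append(f"flow '{f.name}' touches unknown object '{end}'")
    # Every named object should be attached to at least one data flow.
    connected = {end for f in flows for end in (f.source, f.dest)}
    for label in nodes:
        if label.strip() and label not in connected:
            errors.append(f"object '{label}' has no data flows")
    return errors


# Figure 1, but with the Equation flow left hanging in midair.
nodes = {"Reaction Info": "store", "Build Equation": "process", "Equation": "store"}
flows = [Flow("Chemical Formulas", "Reaction Info", "Build Equation"),
         Flow("Equation", "Build Equation", None)]
for problem in check_dfd(nodes, flows):
    print(problem)
```

This prints two complaints: the Equation flow dangles in midair, and the Equation store has no data flows attached to it.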

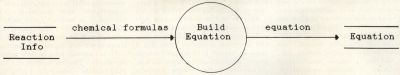

FIGURE 1. Sample Data Flow Diagram Fragment.

These CASE programs also know about the relationships between parent and child diagrams. They can "balance" a child against a parent and identify any missing, extra or incorrect parts. In some programs, when you view the child diagram of a process, the flows into and out of that process automatically appear on the diagram for the child.
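Balancing boils down to comparing two sets of names. This Python sketch assumes a hypothetical representation in which the child diagram's flows are (name, source, destination) tuples, with `None` marking a flow that crosses the diagram boundary; the function `balance` and the child process names are invented for illustration. An "incorrect" part, such as an input drawn as an output, shows up as one missing and one extra entry.

```python
from typing import Optional

# One flow on a child diagram: (name, source, destination). None marks the
# diagram boundary, i.e. a flow that arrives from or leaves for the parent level.
ChildFlow = tuple[str, Optional[str], Optional[str]]


def balance(parent_in: set[str], parent_out: set[str],
            child_flows: list[ChildFlow]) -> dict[str, set[str]]:
    """Compare a parent process's flows with the boundary flows of its child."""
    child_in = {name for name, src, _ in child_flows if src is None}
    child_out = {name for name, _, dst in child_flows if dst is None}
    return {
        "missing inputs": parent_in - child_in,
        "extra inputs": child_in - parent_in,
        "missing outputs": parent_out - child_out,
        "extra outputs": child_out - parent_out,
    }


# Parent: "Build Equation" reads Chemical Formulas and writes Equation.
# Child (hypothetical processes): the designer forgot the Equation output
# and drew a Coefficients output instead.
report = balance(
    {"Chemical Formulas"}, {"Equation"},
    [("Chemical Formulas", None, "Parse Formulas"),
     ("Parsed Formulas", "Parse Formulas", "Assemble Equation"),
     ("Coefficients", "Assemble Equation", None)],
)
print(report)
```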

By letting the computer help handle the mechanical details, you can concentrate on the logical aspects of system design, which is what human beings do best. The computer can remember all the rules we tend to gloss over. It can keep track of the hundreds of detailed components and relationships in a software project with an accuracy we can't approach. It can catch errors we would never see. The result is more effective software development.

The data dictionary connection

Another feature of the analysis/design CASE programs is the ability to relate the objects in your diagrams to the entries in the system data dictionary. If you've ever tried to thoroughly document a software system, you may have discovered at some later date that your documentation contained inconsistencies, errors or omissions (probably all three). The CASE tool can automatically add new entries to its dictionary, thereby ensuring that you have a complete list at all times. It can insist that you supply a definition for each element before allowing you to proceed with your model building.
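Here is a toy Python version of that bookkeeping, assuming a hypothetical `DataDictionary` class that the drawing program calls every time you place a named flow or store. New names are registered automatically, a name drawn twice gets only one entry, and `check_complete` refuses to let you proceed while any definition is missing. A real product would keep all this in its database rather than in a Python dictionary.

```python
from typing import Optional


class DataDictionary:
    """Toy data dictionary: names are registered automatically as flows and
    stores are drawn; definitions are supplied by the analyst."""

    def __init__(self) -> None:
        self.entries: dict[str, Optional[str]] = {}

    def register(self, name: str) -> None:
        """Called by the (hypothetical) diagram editor for every named object."""
        self.entries.setdefault(name, None)  # never clobbers a definition

    def define(self, name: str, definition: str) -> None:
        self.entries[name] = definition

    def undefined(self) -> list[str]:
        """Names still waiting for a definition, alphabetically."""
        return sorted(n for n, d in self.entries.items() if d is None)

    def check_complete(self) -> None:
        """Refuse to go on with model building while anything is undefined."""
        missing = self.undefined()
        if missing:
            raise ValueError("define these first: " + ", ".join(missing))


dd = DataDictionary()
for name in ["Reaction Info", "Chemical Formulas", "Equation", "Equation"]:
    dd.register(name)  # "Equation" is both a flow and a store, so it appears twice
dd.define("Chemical Formulas", "{formula}")
print(dd.undefined())
```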

The nature of the data dictionary varies from one CASE program to another. Most of them support the conventions, called the "DeMarco notation," that I presented in an earlier article. To refresh your memory, here's an example:

reaction = 3 {formula + coefficient}4

This means that a data flow (or store; we can't tell from this definition) named "reaction" is made up of three or four occurrences of a group consisting of an object called "formula" plus an object called "coefficient." See how much more compact the symbolic notation is?
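Because the notation is so regular, a CASE tool can apply it mechanically. The Python sketch below parses the iteration form in the example (reading the bounds inline, as they are printed here) and checks whether a particular reaction conforms to it. Representing each group as a dictionary of components, and the names `Iteration` and `parse_iteration`, are assumptions for illustration; only iteration is handled, not the other DeMarco operators.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Iteration:
    """A DeMarco-style iteration: low{component + component ...}high."""
    low: int
    high: int
    components: tuple[str, ...]

    def conforms(self, groups: list[dict]) -> bool:
        """True if there are low..high groups, each holding exactly the components."""
        if not self.low <= len(groups) <= self.high:
            return False
        return all(set(g) == set(self.components) for g in groups)


def parse_iteration(text: str) -> Iteration:
    """Parse a right-hand side such as '3 {formula + coefficient}4'."""
    m = re.fullmatch(r"\s*(\d+)\s*\{([^}]*)\}\s*(\d+)\s*", text)
    if m is None:
        raise ValueError(f"not an iteration: {text!r}")
    parts = tuple(p.strip() for p in m.group(2).split("+"))
    return Iteration(int(m.group(1)), int(m.group(3)), parts)


reaction = parse_iteration("3 {formula + coefficient}4")
# 2 H2 + O2 -> 2 H2O has three formula/coefficient groups, so it conforms.
water = [{"formula": "H2", "coefficient": 2},
         {"formula": "O2", "coefficient": 1},
         {"formula": "H2O", "coefficient": 2}]
print(reaction.conforms(water))
```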

The CASE program can make sure that you don't use a data structure or element on your diagrams in a way that is inconsistent with its definition in the dictionary. The CASE program stores the data dictionary in a database, and you can generate a variety of reports from this information. You might want a list of all the processes which use the data flow labeled "reaction." Or, you might want a list of all the data stores used in the entire system and their definitions. Computers are great for this sort of thing.
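Reports like these are simple queries once the diagrams live in a database. This Python sketch uses plain lists and dictionaries in place of a real repository; the sample system (processes "Enter Reaction" and "Build Equation," stores "Reaction Info" and "Equation") and the function names are hypothetical.

```python
def processes_using(flow: str, flows: list[tuple[str, str, str]],
                    kinds: dict[str, str]) -> list[str]:
    """Every process that produces or consumes the named data flow."""
    users = {end for name, src, dst in flows if name == flow
             for end in (src, dst) if kinds.get(end) == "process"}
    return sorted(users)


def data_stores(kinds: dict[str, str],
                definitions: dict[str, str]) -> list[tuple[str, str]]:
    """Every data store in the system, with its dictionary definition."""
    return [(name, definitions.get(name, "(undefined)"))
            for name in sorted(kinds) if kinds[name] == "store"]


# Hypothetical system: each flow is (name, source, destination).
kinds = {"Enter Reaction": "process", "Build Equation": "process",
         "Reaction Info": "store", "Equation": "store"}
flows = [("reaction", "Enter Reaction", "Reaction Info"),
         ("reaction", "Reaction Info", "Build Equation"),
         ("equation", "Build Equation", "Equation")]
definitions = {"Reaction Info": "{reaction}"}

print(processes_using("reaction", flows, kinds))
print(data_stores(kinds, definitions))
```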

The repository

Remember that one of the goals of software engineering is to reuse as much existing code as we can. Hardware engineers usually build things by combining a bunch of off-the-shelf components in a new way. Why can't we? For one reason, we don't have an inventory of all the existing software components that would let us see whether what we need already exists and just grab a copy if it does. For another, our software components are not produced in standard formats, with standard connections for linking to other software components. In the hardware world, you buy an MC68000 chip with well-defined properties and stick it into the right kind of socket, with wires coming from the right pins according to published specifications. Not so with software. Some CASE tools are intended to help with these very problems.

All of the information associated with a particular project being developed using a CASE tool is stored in a database or repository. The repository is really the heart of a CASE system. It can contain specifications, documentation, entire diagrams, data dictionary entries and objects that represent the relationships among the components of a diagram.

As an example of this last entity, look at Figure 1. This very simple fragment of a DFD may appear at first glance to contain five objects: data stores called "Reaction Info" and "Equation"; data flows named "Chemical Formulas" and "Equation"; and a process named "Build Equation." But two additional "objects" can be extracted from this diagram. One is the relationship between the three objects Reaction Info, Chemical Formulas and Build Equation; the second is the relationship between the objects Build Equation, Equation (data flow) and Equation (data store).
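One way to picture this is to treat every connection on the diagram as a (source, flow, destination) triple and to store each triple as a relationship object of its own. In this Python sketch, which is an illustration rather than any vendor's repository format, the `kind` field keeps the "Equation" data flow distinct from the "Equation" data store, so Figure 1 yields exactly the five basic objects and two relationships described above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Obj:
    kind: str  # "process", "store" or "flow"
    name: str


@dataclass(frozen=True)
class Relationship:
    """Repository object recording that `flow` carries data from `source` to `dest`."""
    source: Obj
    flow: Obj
    dest: Obj


def repository_items(connections):
    """Split (source, flow, dest) triples into basic objects and relationships."""
    objects = {obj for triple in connections for obj in triple}
    relationships = [Relationship(*triple) for triple in connections]
    return objects, relationships


build = Obj("process", "Build Equation")
figure_1 = [
    (Obj("store", "Reaction Info"), Obj("flow", "Chemical Formulas"), build),
    (build, Obj("flow", "Equation"), Obj("store", "Equation")),
]
objects, relationships = repository_items(figure_1)
print(len(objects), len(relationships))
```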

You already know about reusing actual code; I'm sure you've done it from time to time. But why not reuse a data flow diagram? Or just a part of a DFD? By defining relational objects in the way I just described, we're assembling a catalog of items that could be plucked out of the repository and plugged in wherever they are needed in a future project. This concept of reusability extends well beyond the direct reuse of existing code. By building a system model from a stockpile of existing tried-and-tested components, we are more nearly approaching the enviable situation enjoyed by hardware engineers.

This reusability concept is likely to be one of the real payoffs of CASE, although we really aren't quite there yet. For example, how can we keep track of the objects and relationships already present in our master repository? The computer can define them and store them for us, but how do we find out if the function we need to perform in our new system has already been done before? We could query the database every time we encounter such a relationship, but that's pretty tedious. Wait, I've got it! We'll let the computer query its repository automatically for us. Yeah, that's the ticket!
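As a crude illustration of an automatic query, suppose the repository indexes every stored process by its interface: the set of data flows it consumes and the set it produces. Each time you draw a new process, the tool looks up that interface and suggests earlier matches. The matching rule, the `Repository` class and the project name "Chemistry Tutor" are all hypothetical, and exact name matching is far too naive for real projects, where the same data often goes by different names.

```python
from collections import defaultdict


class Repository:
    """Toy design repository that indexes each stored process by its interface:
    the set of flows it consumes and the set it produces (an assumed rule)."""

    def __init__(self) -> None:
        self._index = defaultdict(list)

    @staticmethod
    def _key(inputs, outputs) -> tuple[frozenset, frozenset]:
        return frozenset(inputs), frozenset(outputs)

    def store(self, project: str, process: str, inputs, outputs) -> None:
        self._index[self._key(inputs, outputs)].append((project, process))

    def suggest(self, inputs, outputs) -> list[tuple[str, str]]:
        """Run automatically whenever a new process is drawn."""
        return list(self._index.get(self._key(inputs, outputs), []))


repo = Repository()
repo.store("Chemistry Tutor", "Build Equation", {"Chemical Formulas"}, {"Equation"})
# Later, in a new project, the designer draws a process with the same interface:
print(repo.suggest(["Chemical Formulas"], ["Equation"]))
```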

Some of the most sophisticated CASE tools on the market do include a sort of artificial intelligence system that looks for reusable components in the system being defined. This is a CPU-intensive task, as you might imagine. The situation is akin to having an omniscient system designer peering over your shoulder and pointing out design components he recognizes from earlier projects. Such programs are not yet in widespread use, but someday they will be.

One problem with software developers is that they tend to keep their work to themselves. A consequence is that we constantly reinvent a huge number of wheels, simply because we don't know that someone else has already done the job for us. The multitude of incompatible computer systems doesn't help either. But if a team of programmers all had access to the same master repository of design parts, it would be much easier to reuse existing components. One approach is to have the personal workstations used by individual developers linked to a large computer on which the repository is stored. In the future, I think you'll see more and more professional software development organizations going toward this sort of work environment.

Code generators

Even with all of the computer assistance that CASE can bring to the analysis and design steps, it still boils down to a human being writing some source code eventually. But does it have to? No.

After the analysis and overview design steps are completed, with the aid of CASE tools to guarantee that your design is valid and internally consistent (note that I didn't say "correct"), you still need to write process specifications before you can type out any source code. A couple of months ago, we looked at several different techniques for writing process narratives, including flow charts, pseudocode and action diagrams (there are other methods, too). The code you write is only as good as the process narratives you write. If the specs are detailed enough, writing the actual code in the programming language of choice is almost a mechanical task.

What if your process narratives were written in such a way that a computer program could read them and write the corresponding program code in the correct language? That would certainly save a lot of time, and it would avoid the inevitable typos that creep in because, once again, we're human. As I said, the code still can be only as good as the process narratives. A really smart program could even inspect your process narrative for accuracy and correctness, if it were written in a way that the program could understand. This is the idea behind "code generators," a kind of CASE tool that automatically produces source code from a set of module specifications.
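Here is a deliberately tiny Python sketch of the idea. It assumes a made-up structured-English subset with just five keywords (SET ... TO, IF, ELSE, ENDIF and RETURN) and translates a narrative written in that subset into Python source. The subset, the keyword spellings and the function `generate` are illustrations only; a real code generator handles far richer specifications and typically targets a language such as COBOL. Because the translation is purely mechanical, the same spec always yields the same code.

```python
def generate(name: str, params: list[str], spec: str) -> str:
    """Translate a restricted structured-English spec into Python source."""
    out = [f"def {name}({', '.join(params)}):"]
    depth = 1  # current indentation level of the generated code
    for raw in spec.splitlines():
        stmt = raw.strip()
        if not stmt:
            continue
        keyword, _, rest = stmt.partition(" ")
        pad = "    " * depth
        if keyword == "SET":
            target, _, expr = rest.partition(" TO ")
            out.append(f"{pad}{target} = {expr}")
        elif keyword == "IF":
            out.append(f"{pad}if {rest}:")
            depth += 1
        elif keyword == "ELSE":
            out.append("    " * (depth - 1) + "else:")
        elif keyword == "ENDIF":
            depth -= 1
        elif keyword == "RETURN":
            out.append(f"{pad}return {rest}")
        else:
            raise SyntaxError(f"cannot translate: {stmt!r}")
    return "\n".join(out) + "\n"


SPEC = """
SET total TO count * coefficient
IF total > limit
    SET total TO limit
ENDIF
RETURN total
"""
source = generate("capped_total", ["count", "coefficient", "limit"], SPEC)
print(source)
```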

CASE tools for analysis and design are sometimes called front-end CASE tools, or "upperCASE." Code generators can be referred to as back-end CASE tools, or "lowerCASE." The upperCASE/lowerCASE nomenclature is probably a feeble attempt at software engineering humor.

There's another kind of code generator idea too. This one consists of a template of code intended for a particular purpose, which is customized according to individual specifications to generate the final source code. An example is a general-purpose program for displaying data-entry screens, providing full-screen editing capability and the ability to validate the user's entries in particular fields according to specified criteria. A template program for this purpose would be merged with specific information about each screen, the fields on it, allowable field values and so on, to produce complete source code.
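A template generator can be as simple as text substitution. In this Python sketch, built on the standard library's `string.Template`, a general-purpose validation routine is merged with one screen's field specifications (here, hypothetical numeric ranges) to produce complete source code. The template text and the names `generate_screen` and `validate_reaction` are my own illustrations, and the full-screen editing part of such a program is left out.

```python
from string import Template

# General-purpose template for one data-entry screen's validation routine.
SCREEN_TEMPLATE = Template('''\
def validate_$screen(entries):
    """Generated code: check the $screen screen; return error messages."""
    errors = []
$checks    return errors
''')

# Fragment repeated once for every field on the screen.
CHECK_TEMPLATE = Template('''\
    if not ($low <= entries["$field"] <= $high):
        errors.append("$field must be between $low and $high")
''')


def generate_screen(screen: str, fields: dict[str, tuple[int, int]]) -> str:
    """Merge the template with one screen's field specifications."""
    checks = "".join(CHECK_TEMPLATE.substitute(field=name, low=lo, high=hi)
                     for name, (lo, hi) in fields.items())
    return SCREEN_TEMPLATE.substitute(screen=screen, checks=checks)


source = generate_screen("reaction", {"coefficient": (1, 99),
                                      "temperature": (0, 500)})
print(source)
```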

A number of code generator programs exist at this very minute, ranging from simple Atari BASIC programs that write a subroutine for handling player-missile graphics, on up to mainframe programs that automatically produce valid COBOL source code from appropriate process specifications. The source code produced can either be in the form of a full, executable program, or it might be a skeleton that still needs to be fleshed out manually. For example, the template-type programs I described above may have some provision for you to attach your own individual modules into the generated code. This allows you to take advantage of the computer's program writing ability, while still being able to customize its output to meet your specific needs.

Once a system has entered the maintenance phase, changes undoubtedly will need to be made. If the program was written by a code generator, you have a couple of choices for maintenance. One is simply to forget about the code generator, and just do maintenance on the source code it provided for you (which you may or may not have customized). An alternative is to change your process specifications and regenerate the code for that module, and then re-integrate that module with the rest of the system.
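One compromise, used by some generators, marks off regions of the generated source where hand-written code may go, then copies those regions forward each time the module is regenerated. This Python sketch assumes a hypothetical marker convention (`# BEGIN USER CODE name` and `# END USER CODE name`); it is not the format of any particular product.

```python
import re

# Hypothetical marker convention for hand-written regions in generated code.
REGION = re.compile(r"# BEGIN USER CODE (\w+)\n(.*?)# END USER CODE \1\n", re.S)


def regenerate(new_code: str, old_code: str) -> str:
    """Carry each hand-written region of old_code into freshly generated new_code."""
    saved = {m.group(1): m.group(2) for m in REGION.finditer(old_code)}

    def restore(m: re.Match) -> str:
        whole = m.group(0)
        start, end = m.start(2) - m.start(0), m.end(2) - m.start(0)
        # Keep the new markers but swap in the old body, if there was one.
        return whole[:start] + saved.get(m.group(1), m.group(2)) + whole[end:]

    return REGION.sub(restore, new_code)


OLD = """def f(x):
    y = x + 1
    # BEGIN USER CODE adjust
    y = y * 10
    # END USER CODE adjust
    return y
"""
NEW = """def f(x):
    y = x + 2
    # BEGIN USER CODE adjust
    pass
    # END USER CODE adjust
    return y
"""
print(regenerate(NEW, OLD))  # regenerated logic, hand-written adjustment kept
```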

Some CASE products force you into the latter mode, since their code generator directly produces object code, not source code. You couldn't maintain the source code if you wanted to, since there isn't any! This is probably a better approach if you have a lot of confidence in your code generator, since you're likely to introduce errors by manually modifying source code while the code generator probably won't. However, this makes it nearly impossible to customize the code generated by the computer.

Code generators have both good and bad aspects. On the plus side, they can remove the tedium and errors associated with manual code creation using a program text editor. They are reproducible: Each time you generate code from the same set of specs, you'll get the same product. The code produced by a particular code generator will have a uniform style, whereas if you gave exactly the same specs to several human programmers, the programs they wrote would all look different. Code generators can also produce some documentation of your system automatically, such as module hierarchy diagrams, lists of variables and their characteristics and data interface information.

On the other hand, the code produced by the generator often isn't very efficient, especially that created by one of the template-type programs I mentioned. Of necessity, such programs are generalized in their purpose, and as such may produce redundant code, introduce variables from the template that you don't need for your specific application and so on. The smarter the code generator, the less of a problem this will be. But a skilled human programmer will nearly always be able to write more efficient code than any automatic code generator.

(Remember, however, that there are several kinds of "efficiency" surrounding computer software: programmer time, computer time and user time. Sacrificing some computer time in order to save programmer time usually is a cost-effective move.)

Code generators that work directly from your specifications may produce more efficient code, but the quality of the code is restricted by the quality of the specification (as always). You won't have the luxury of writing the process narrative any old way you like. You'll have to follow a set of rules intended to let the code generator understand your intentions. You might be able to run the process specification through some kind of automated preprocessor that checks for syntax and structure problems before actually producing code.
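Such a preprocessor can be quite simple if the rules are strict. This Python sketch assumes a hypothetical structured-English subset (SET ... TO, IF, ELSE, ENDIF, RETURN) and reports unknown keywords, a SET without TO, and unbalanced IF/ENDIF pairs, each with its line number. The keywords and the function `check_spec` are illustrations, not the rules of any actual product.

```python
KEYWORDS = {"SET", "IF", "ELSE", "ENDIF", "RETURN"}


def check_spec(spec: str) -> list[str]:
    """Report syntax and structure problems in a restricted structured-English spec."""
    problems = []
    open_ifs = []  # line numbers of IFs still waiting for their ENDIF
    for n, raw in enumerate(spec.splitlines(), start=1):
        stmt = raw.strip()
        if not stmt:
            continue
        word = stmt.split()[0]
        if word not in KEYWORDS:
            problems.append(f"line {n}: unknown keyword {word!r}")
        elif word == "IF":
            open_ifs.append(n)
        elif word in ("ELSE", "ENDIF") and not open_ifs:
            problems.append(f"line {n}: {word} without IF")
        elif word == "ENDIF":
            open_ifs.pop()
        elif word == "SET" and " TO " not in stmt:
            problems.append(f"line {n}: SET needs TO")
    problems += [f"line {n}: IF never closed" for n in open_ifs]
    return problems


print(check_spec("SET t = a * b\nIF t > m\nLOOP forever\nRETURN t"))
```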

You've probably already used one variety of code generator: a compiler. A compiler works from specifications (source code) to produce object code. Smart compilers may actually restructure your source code into a more efficient format, although they don't normally tell you what they did so that you could improve the source code yourself. Other CASE tools to optimize and restructure source code for improved efficiency and maintainability do exist for certain languages and computers, however.

Other kinds of CASE

The analysis/design and code generator CASE tools apply generally to the classic software development life cycle we've been exploring in this series. But there are alternative approaches to building software systems (to be covered in a future article), and CASE tools are available to help with them too. For example, another way to build systems is to begin with a partial specification and build a prototype system to address those specifications. By repeated iterations of modifying the specifications and building a new prototype, you can evolve in the direction of the final system.

At least one CASE package is available that's designed specifically for this prototyping life cycle. The key to effectiveness in this development mode is rapid turn-around from specification change to creation of the new prototype system. One large chemical company has actually gone into the commercial software business, creating systems for other companies using a CASE environment that permits such rapid iterative prototyping. The nature of the projects that can be produced using this technique is somewhat limited, but if your problem falls into that category, it's possible to get a running system in place much faster than using the sequential life cycle we're used to.

Other products touted as CASE tools include so-called screen painters and report generators. A screen painter program helps you design full-screen data entry and menu panels, possibly writing some of the code that drives the panel. A report generator makes it easy for you to design printed reports or screen displays based on such tasks as querying a database. Again, it may actually write the code that will produce the desired formatted output.
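The essence of a report generator is that you describe the layout and the program does the formatting. In this Python sketch, the column layout (heading, field name and width) is a hypothetical specification format, with numbers right-aligned and text left-aligned; a real product would also handle page headings, totals and the database query itself.

```python
def make_report(title: str, columns: list[tuple[str, str, int]],
                rows: list[dict]) -> str:
    """Format rows according to a declarative column layout.

    Each column is (heading, field name, width). Numbers are right-aligned,
    everything else is left-aligned, and long values are truncated.
    """
    def cell(value, width: int) -> str:
        text = str(value)[:width]
        return text.rjust(width) if isinstance(value, (int, float)) else text.ljust(width)

    header = " ".join(heading[:width].ljust(width) for heading, _, width in columns)
    lines = [title, header, "-" * len(header)]
    lines += [" ".join(cell(row[field], width) for _, field, width in columns)
              for row in rows]
    return "\n".join(line.rstrip() for line in lines)


layout = [("Formula", "formula", 8), ("Coeff", "coefficient", 5)]
rows = [{"formula": "H2", "coefficient": 2},
        {"formula": "O2", "coefficient": 1},
        {"formula": "H2O", "coefficient": 2}]
print(make_report("Reaction: 2 H2 + O2 -> 2 H2O", layout, rows))
```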

Such products sometimes are grouped with other "fourth generation techniques" as another CASE classification. Fourth-generation languages and techniques are software aids that make it easier for a user to program a computer to perform some task, without writing reams of explicit procedural code. Most of the languages you've heard of are procedural (third-generation) languages, like C, FORTRAN, Pascal and BASIC. Fourth-generation languages, or 4GLs, often are tied to relational databases; they are intended in part to make it easier for end users to access the information in the database without needing the services of an official computer programmer.
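The difference in style is easiest to see side by side. This Python sketch answers one question two ways: with an explicit loop, in the procedural spirit of a third-generation language, and with a query in SQL (run through Python's built-in `sqlite3` module), a database language often grouped with the fourth-generation tools. The small table of compounds is just an example, and in a real 4GL setting the data would already be sitting in the database; here a scratch table is built so the sketch runs on its own.

```python
import sqlite3

# A small table of compounds and their molar masses in g/mol.
COMPOUNDS = [("H2", 2.016), ("O2", 31.998), ("H2O", 18.015), ("CO2", 44.009)]


def heavier_procedural(data, limit):
    """Third-generation style: spell out *how* to get the answer, step by step."""
    result = []
    for formula, mass in data:
        if mass > limit:
            result.append(formula)
    result.sort()
    return result


def heavier_declarative(data, limit):
    """Fourth-generation style: say *what* you want; the database decides how."""
    con = sqlite3.connect(":memory:")
    con.execute("CREATE TABLE compound (formula TEXT, mass REAL)")
    con.executemany("INSERT INTO compound VALUES (?, ?)", data)
    query = "SELECT formula FROM compound WHERE mass > ? ORDER BY formula"
    result = [formula for (formula,) in con.execute(query, (limit,))]
    con.close()
    return result


print(heavier_procedural(COMPOUNDS, 20))
print(heavier_declarative(COMPOUNDS, 20))
```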

Still other sorts of CASE software deal with the thorny issues of project planning, control and management. While critical for large-scale software development, they don't apply too much to cottage industry types.

Getting into CASE

The good news is that CASE is a very active subfield of software engineering at the moment. There are many products on the market, and they are increasing in sophistication as they decrease in price.

The bad news is that most CASE programs are designed to run on a limited number of hardware systems, and Atari isn't one of them. I suspect it never will be. The hardware limitation isn't fatal, but it certainly cramps your style. I've used a PC-based CASE program to design systems that will run on an IBM mainframe computer. This works fine, except that there's no way I could use a PC-based code generator program to produce output that would be useful on a mainframe.

How about the oft-advertised claim of portability of applications written in the C language? Maybe someday we could use a C-code generator (there aren't many yet) on one computer to produce code to be executed on another. Maybe.

If you have access to a computer system for which CASE tools are available, you may be able to use them to design software to run on an Atari. I'm sure most of you don't fit into that enviable category, and most CASE tools are still priced well out of reach for the individual developer, let alone the hobbyist. I wish I could be more encouraging.

The bleak outlook for CASE on the ST doesn't mean you've wasted your time reading this article. Before implementing CASE tools for systems development, you should already have bought into the methodology on which the CASE program is based. Hence, you surely aren't wasting your time by learning software engineering techniques such as data flow diagramming now. This way, you'll be ready to use CASE whenever you have the opportunity.

The future of CASE

Computer-aided software engineering is still in its infancy. There are still large gaps between front-end analysis/design tools and code generators, in most cases. This is equivalent to saying that there are a lot of CASE toolkits available, but not very many CASE workbenches. In the future, more software development will be done in the workstation environment, with each developer having access to an array of powerful CASE tools as well as to a large, shared repository of design components from previous projects. The multitude of computer languages, operating systems and hardware in the world right now will impede progress toward this goal, unfortunately.

Future CASE systems will probably support speech recognition and voice synthesis, which have the potential of being more efficient man/machine communication tools than mice (mouses?) and on-screen messages. CASE programs will become increasingly intelligent, with natural-language processing ability. The goal is to let the computer handle much of the burden of taking abstract design thoughts from us scatterbrained human beings and converting them into the rigorously structured form required for execution by machines.

What do we expect to get from CASE? Lots of benefits, including vastly improved quality and much increased productivity. There aren't a lot of data available yet, but the kinds of productivity gains attributable to CASE seem to range between 25% and 2,000%. This is a pretty big range, and it's hard to draw many conclusions from it. The productivity gains depend on how lousy your current development efforts are, just what CASE tools are being used, the nature of the software projects being undertaken, how well-trained the software engineers involved are and even the methods used to measure productivity.

My personal experience suggests that the biggest benefit of using front-end CASE tools is in software quality, not necessarily productivity. The ability to have the computer check your design for errors and inconsistencies is a real step forward, since preventing bugs is highly preferable to searching for them during testing. The real productivity increases will come from increased reuse of existing code and design components.

Automatic code generation offers advantages in both quality and productivity. I'm willing to sacrifice some execution efficiency if someone or something else is writing my program for me, since the net result is a decrease in the time I spend working on the project. As hardware and computer time costs continue to decrease, we can trade computer time for human time.

The short answer is that computer-aided software engineering is probably the best hope for beating the "software crisis" into submission. That makes it pretty important to all but the most casual computer hobbyists.

After receiving a Ph.D. in organic chemistry, Karl Wiegers decided it was more fun to practice programming without a license. He is now a software engineer in the Eastman Kodak Photographic Research Laboratories. He lives in Rochester, New York, with his wife, Chris, and the two cats required of all ST LOG authors.